Most DAWs come with a time & pitch editing feature nowadays, and often may include another useful capability—the option to convert an audio recording to MIDI.

There are a number of reasons to do this—a common application is drum replacement, but here I'm going to talk about converting melodic audio recordings to MIDI. A typical scenario might involve a musician whose main instrument is not keyboard—for example a guitarist or sax player, or even a vocalist.

Instead of struggling to get a good keyboard performance which may not represent the best that player is capable of on his/her own primary axe, that musician can record parts with greater dexterity and musicality on their own instrument, and then convert those performances to MIDI. While there are specialized MIDI guitars and wind instruments, these can be pricey and often require the player to master a new playing technique, so the ability to take a recording made with a familiar, comfortable instrument and use that to trigger various MIDI-based sounds can be a very welcome option.

Audio->MIDI Applications

Converting audio to MIDI may also come in handy when a part (say, an electric bass track) needs more than just EQ and compression; perhaps a fingered bass would sound better with a pick bass sound, or even as an acoustic bass. Once the audio recording is converted to a MIDI sequence, different bass sounds can be substituted until the ideal one is found. A guitarist could even play a bassline on the lower strings of the guitar, convert to MIDI, and drop the pitch an octave, getting the benefit of a more characteristic fretboard-based performance.

Sometimes simply doubling a part by assigning the converted MIDI track to another instrument can help fill out an arrangement more effectively than electronic doubling of the original instrument. The combination of different timbres—the original part plus the sound of a suitable virtual instrument triggered by the MIDI version—can add a much richer character to the musical arrangement than just a little extra delay/chorusing would.

More creative applications are possible as well. A wind player might record, say, a sax part, convert to MIDI, and then copy the MIDI version several times, assigning each copy to a different horn (bari sax, trumpet, trombone) to create a full horn section. Transposition can be used for fuller chords, and possibly a little delay for a more natural, human sense of ensemble timing, while still benefitting at least to some degree from the fluidity of the original performance on the real instrument. I used to work with a vocalist who would improvise a number of wordless vocal parts, convert them to MIDI, and then use those sequences to trigger all sorts of pads and thick, evolving sounds, building up entire arrangements without ever touching a keyboard.
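To make the copy, transpose, and delay idea concrete, here's a minimal sketch in Python. It isn't anything Logic exposes; the `Note` class, the `make_part` helper, and the particular intervals and delays are all hypothetical, chosen only to illustrate how one converted line could be fanned out into several parts.

```python
from dataclasses import dataclass, replace

@dataclass(frozen=True)
class Note:
    pitch: int       # MIDI note number, 0-127
    velocity: int    # MIDI Velocity, 1-127
    start: float     # start time in seconds
    duration: float  # length in seconds

def make_part(notes, semitones=0, delay=0.0):
    """Return a transposed, delayed copy of a note list.

    Notes that would fall outside the MIDI pitch range are dropped.
    """
    part = []
    for n in notes:
        pitch = n.pitch + semitones
        if 0 <= pitch <= 127:
            part.append(replace(n, pitch=pitch, start=n.start + delay))
    return part

# A two-note stand-in for the MIDI extracted from the sax recording.
sax = [Note(62, 90, 0.0, 0.5), Note(65, 80, 0.5, 0.5)]

# Hypothetical voicing: each copy gets its own interval and a few ms of delay.
section = {
    "trumpet": make_part(sax, semitones=0, delay=0.0),
    "trombone": make_part(sax, semitones=-12, delay=0.012),
    "bari sax": make_part(sax, semitones=-5, delay=0.020),
}
```

In Logic itself you'd get the same result by duplicating the Region onto separate Instrument tracks and setting transposition and delay per Region; the sketch just shows the arithmetic behind it.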

In Practice

Of course, in practice it's often nowhere near as easy to achieve good, musical results from a converted audio-to-MIDI track as the rosy description above might suggest. It's not unusual to have to substantially massage either the audio before conversion, the MIDI after conversion, or both, to get natural-sounding, musical performances out of the virtual instruments that are triggered by the converted MIDI sequences. And many of the more expressive gestures made by the player with the original, acoustic instrument will simply not translate—or at least not translate directly—to the MIDI-triggered part. But the potential benefits may still be worth whatever extra effort might be needed to get the best results.

Let's take a brief look at the basic process of audio-to-MIDI conversion, and a few of the issues that may come up. I'll use the audio-to-MIDI feature built into Logic as an example.

Flex Pitch->MIDI

Besides a dedicated audio drum-to-MIDI function, Logic can convert melodic audio recordings to MIDI as well, via its Flex Pitch editor. As most Logic users already know, Flex Pitch analyzes a monophonic audio recording, determines the notes' pitches, and displays them for editing. Like (almost) all such pitch editors, it's designed to work only with monophonic melodies—no chords—and this limitation applies to the audio-to-MIDI conversion as well, which is derived from the Flex Pitch analysis.

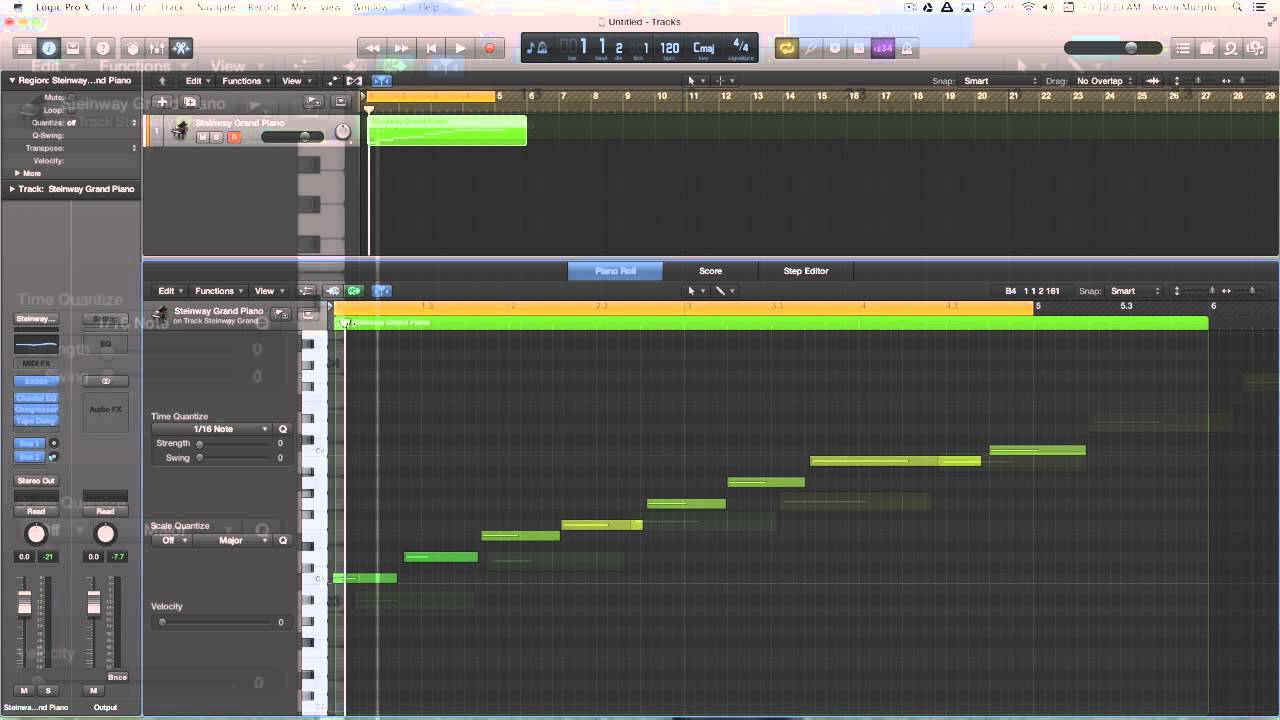

The actual process of creating a MIDI track from an analyzed Flex Pitch track is very simple. The first step is to enable Flex Pitch for the source audio track. Once the track's been analyzed and the Flex Pitch note bars appear, then you'd select the command 'Create MIDI Track from Flex Pitch Data' from either the main Edit menu or the local Edit menu in the Editor pane.

Logic will automatically create a new Instrument track (with the default Instrument) right below the audio track, with a Region containing the newly-extracted MIDI data. You can then change the default Instrument to your preferred Instrument, or simply drag the new MIDI Region to a preferred Instrument track.

A new MIDI track converted from the Flex-Pitched audio track just above

Fine Tuning

The new MIDI data will contain MIDI notes for each pitch detected by the initial Flex Pitch analysis; the timing of the MIDI data will follow the timing of the notes in the audio recording. If some note pitches are mis-detected, or need to be split into separate notes, or if stray noises result in false/off-pitch notes, the appropriate edits can be made either in the Flex Pitch Editor before converting to MIDI or in the Piano Roll Editor afterwards.

Sometimes the note-start points detected in the Flex Pitch analysis might be a little late (allowing for the time it takes for each note's initial attack to settle into a steady pitch). As a result, the converted MIDI sequence might sound a little late against the rest of the track, or when doubled with the original audio recording. That's easily remedied: just advance the MIDI Region by a couple of milliseconds (or apply a negative delay in the Region Inspector) to correct for any lag.
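If you'd rather think in ticks than milliseconds, the conversion depends on tempo. Here's a rough sketch of the arithmetic; the function names are my own, and the 960 ticks-per-quarter-note resolution is an assumption, so substitute whatever resolution your sequencer actually uses.

```python
def ms_to_ticks(ms, bpm, ppq=960):
    """Convert a time offset in milliseconds to sequencer ticks.

    ppq (ticks per quarter note) is an assumed resolution, not a fixed rule.
    """
    beats = (ms / 1000.0) * (bpm / 60.0)
    return beats * ppq

def advance(starts_ms, offset_ms):
    """Shift note start times earlier by offset_ms, never before time zero."""
    return [max(0.0, s - offset_ms) for s in starts_ms]

# At 120 bpm a quarter note lasts 500 ms, so 5 ms is a small fraction of a beat.
lag_in_ticks = ms_to_ticks(5, bpm=120)

# Pull slightly late note starts forward by 4 ms.
corrected = advance([0.0, 503.0, 1004.0], 4.0)
```

The same few milliseconds correspond to more ticks at faster tempos, which is one reason a millisecond-based delay setting is often the easier one to reason about.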

If the timing of the original recording is a little imperfect, you'll have to decide if it's a musical imperfection that'll add a little welcome humanity to the new MIDI part, or if it's too sloppy. You could choose to manually correct timing or apply quantization to the original audio before converting to MIDI. But you might find there's a little more flexibility in converting the audio as is, and then applying any auto-correction of timing independently to the original audio and the new MIDI parts, especially if you're doubling them. You could use partial quantization or Logic's MIDI-only Smart Quantize option to dial up a less-than-perfect but more natural timing relationship between the doubled tracks, both based on that same original performance.
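For anyone curious what partial quantization amounts to, here's a generic sketch: each note is moved only part of the way toward the nearest grid position. This is not Logic's Smart Quantize algorithm (which I'm not attempting to reproduce); `quantize_partial` and its parameters are hypothetical names for illustration.

```python
def quantize_partial(start, grid, strength):
    """Move a note start part of the way toward the nearest grid line.

    start and grid are in beats; strength runs from 0.0 (untouched)
    to 1.0 (fully quantized).
    """
    nearest = round(start / grid) * grid
    return start + (nearest - start) * strength

# A note played slightly after beat 1.0, on a sixteenth-note grid (0.25 beats).
loose = quantize_partial(1.1, grid=0.25, strength=0.0)   # left as played
half = quantize_partial(1.1, grid=0.25, strength=0.5)    # halfway to the grid
tight = quantize_partial(1.1, grid=0.25, strength=1.0)   # snapped to the grid
```

Applying a lower strength to the MIDI double than to the audio (or vice versa) is one way to keep the two parts from lining up too mechanically.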

Dynamics

Besides pitch and timing, the levels of the audio notes will be interpreted as MIDI Velocity data, with an appropriate Velocity value for each new MIDI note.

The Velocities of the converted MIDI notes are shown as different colors in Logic's Piano Roll Editor

For percussive instruments, that should be all that's needed for an appropriately musical MIDI track. So if an audio recording of, say, a bass was converted to MIDI, the MIDI bass notes will reflect the dynamics of the original performance when they're used to trigger a different bass patch, or a different doubled instrument.

But with some audio melodies there may be other considerations that make it a little more difficult to get an appropriate musical performance from the new MIDI/Instrument track. First off, Flex Pitch derives the MIDI Velocity values from the dynamic range of the audio recording, setting Velocities between 1 and 127 (in MIDI, a note-on with a Velocity of 0 is treated as a note-off). But there may or may not be any musical relationship between the actual dynamic level variations of the original audio track and the dynamic response of the Virtual Instrument being triggered by the new MIDI sequence. As a result, as often as not you'll have to tweak the Velocity range of the MIDI/Instrument track. If you're a programming maven you could do this by adjusting the Velocity response of level and tone within the Instrument itself, but an easier solution would be to tweak the Velocity range of the MIDI data.

In Logic, this can be done non-destructively in the Region Inspector for the selected MIDI Region, or via a MIDI plug-in. In the Region Inspector, the Velocity setting provides for a static offset of Velocity values, and the Dynamics setting works in conjunction with that, offering settings that compress or expand the Velocity range around a middle Velocity value of 64; you can tweak these together to scale the Velocity response for the selected MIDI Region(s) to the response of the Virtual Instrument being triggered, adjusting by ear until you've achieved the desired musical/dynamic response. This allows separate settings for different MIDI Regions extracted from the audio, which may be necessary to get the best Velocity response in different musical sections.
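As a rough illustration of how an offset and a compress/expand setting interact, here's a sketch that scales Velocities around a midpoint of 64 and then adds a static offset. The formula is my own simplification, not Logic's documented behavior, and `shape_velocity` with its parameters is a hypothetical name.

```python
def shape_velocity(velocity, offset=0, dynamics=1.0, center=64):
    """Compress or expand a Velocity around a center value, then offset it.

    dynamics < 1.0 narrows the range, dynamics > 1.0 widens it.
    The result is clamped to the valid note-on range of 1-127.
    """
    scaled = center + (velocity - center) * dynamics + offset
    return max(1, min(127, round(scaled)))

# Halve the dynamic range: loud notes come down, soft notes come up.
softer_peak = shape_velocity(100, dynamics=0.5)
# Halve the range and lift everything by 10.
lifted_quiet = shape_velocity(20, offset=10, dynamics=0.5)
# Expanding plus a large offset runs into the ceiling.
clipped = shape_velocity(120, offset=20, dynamics=1.5)
```

The clamping at the top is worth noticing: push the offset too far and the loudest notes all end up at the same maximum Velocity, flattening the very dynamics you were trying to preserve.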

Alternatively, you can scale MIDI response for the entire Track/Channel Strip with the Velocity Processor plug-in, instantiated in the MIDI Plug-in area of the Instrument Track. This gives you several ways of scaling the MIDI data—the Add/Scale option is pretty much the same as using the Region Inspector.

Two ways to adjust Velocities in the converted MIDI track

Sustaining Instruments

With sustaining instruments, another dynamic consideration would be level/tone variations applied to a sustaining note by the player after the note is initially triggered. An example would be a wind player (e.g. trumpet or sax) who plays and holds a note, and performs a subtle swell on the held note, adding level and slightly brightening the tone. This is a performance technique that wind players, as well as performers on other sustaining instruments, typically use, and, unfortunately, it doesn't always translate to MIDI. In particular, Logic doesn't include this aspect of the original performance in the converted MIDI data. Likewise, if there's vibrato in the original performance, this will also not be included in the converted MIDI data.

So if those performance gestures are important to have in the new MIDI-based version of the performance, they'll have to be added after the fact, by assigning level and tone (Filter) to a MIDI CC control in the Virtual Instrument, and overdubbing suitable MIDI CC Data/Automation to add that element of the original performance to the new MIDI-based part. This can be a little tweaky, and perhaps outside the technical comfort zone of some producers, but it can help to add expression to the converted part, and since the manually-added swells or variations won't exactly match those in the original recording, they can help to subtly distinguish otherwise-identical parts in the case of an audio+MIDI doubling (case in point: my audio-to-MIDI horn 'section' example above).
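If you'd rather generate a swell than perform it, the CC data is just a ramp of controller values across the held note. Here's a minimal sketch; using CC 11 (Expression) is an assumption on my part, since the controller that actually drives level or filter depends on how the Virtual Instrument is set up, and `swell` is a hypothetical helper.

```python
def swell(start, duration, low, high, steps=16, cc=11):
    """Build a linear ramp of (time, cc_number, value) events over a held note.

    cc defaults to 11 (Expression) purely as an example; values are
    clamped to the 0-127 controller range.
    """
    if steps < 2:
        raise ValueError("steps must be at least 2")
    events = []
    for i in range(steps):
        fraction = i / (steps - 1)
        time = start + duration * fraction
        value = round(low + (high - low) * fraction)
        events.append((time, cc, max(0, min(127, value))))
    return events

# A two-second swell from a moderate level up to a strong one.
events = swell(0.0, 2.0, low=60, high=100, steps=5)
```

A straight line is the simplest shape; a real player's swell usually starts slowly and blooms toward the end, so you'd likely reshape the curve by hand afterwards.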

Wrap Up

Sometimes an audio-to-MIDI conversion goes smoothly, with everything dropping into place, allowing for very quick and easy instrument doublings or substitutions. Other times, a little—or a lot of—tweaking may be required to get musically appropriate, expressive MIDI parts from recorded audio melodies. But once you get used to the process, even an audio-to-MIDI conversion that does need a bit of fine-tuning can still be done efficiently, and could open up a very welcome set of musical/creative opportunities for arranging and production.